AI-augmented development, or, with a more negative connotation, “vibe coding”, has gained traction even in dusty corporate Germany. In the positive sense, it means using AI as a multiplier for developer productivity while maintaining human oversight and expertise. In the negative sense, it means writing code based on intuition and AI suggestions without a deep understanding of what’s happening under the hood. The truth, as so often, lies somewhere in between.

Are all programmers and engineers soon to be replaced by the silicon co-workers we helped create and train? Let’s see what it feels like to work with an AI agent.

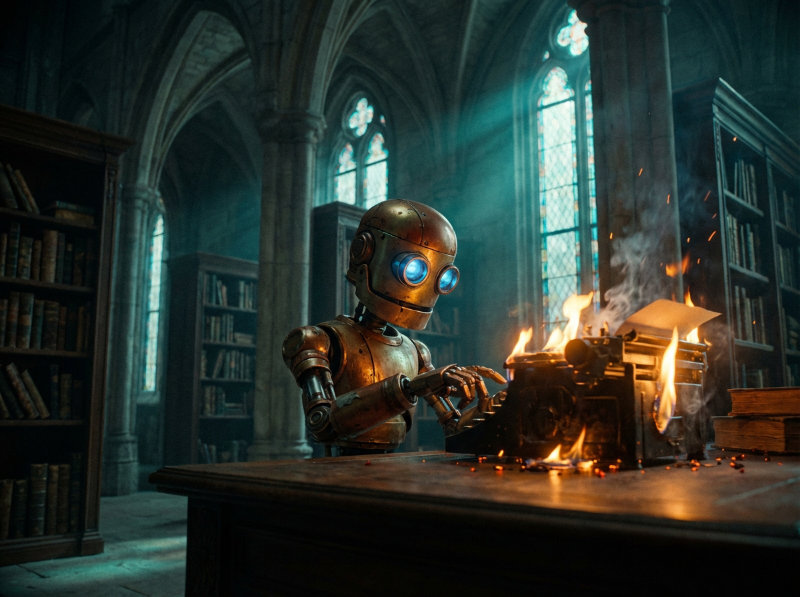

Enchantment

During the first couple of days, it feels like magic. You’ve figured out a couple of good prompts. A good workflow. A process that more or less gets you what you want. You prompt, wait a bit, and sure enough: after a few seconds of blinking, bubbling, and “thinking”, hundreds of lines of code have appeared. Freshly generated, just for you. Ready for review. Ready for production, even! Your AI co-worker says so itself. While the AI does the hard work, you can do other things, or just watch it toil away while you enjoy a nice cup of coffee. The review is fine, LGTM, and the PR is merged. Onwards to the next task. You feel super productive. One colleague mentioned that tasks that would usually take him a week or so can now be done in a day, and tasks that would take him a day can now be done in a couple of hours or even minutes.

For some tasks I agree. But for others I don’t.

Disenchantment

Depending on the prompt and the mood of the LLM, the generated code can look pretty good. You read the code it has written. It makes sense at first. It looks fine. Alas, the devil is in the details. When you actually have to work with the code, lots of things surface that you would have done differently, that are solved differently elsewhere in the code base, or that just don’t quite fit. There are inconsistencies everywhere:

- Suddenly emojis appear in commit messages, code, and readme files

- Established error-handling patterns get ignored

- New methods appear on structs because it was convenient to do so

- Clearly defined requirements are left out, with a comment saying they will have to be solved later (“For now, …”)

- You sometimes get more than you asked for, but often not in a good way

- Duplicated code and redundant validations in every layer. The AI neither knows nor cares about existing “helper” functions

- Hallucinated library methods or functionality (though this is becoming rare)

- If the AI-generated code is rubbish, the tests for this code will only verify the rubbish and will therefore be rubbish as well.

You can mitigate all of the above with good context, spec-driven development, and shrewd use of MCP servers. But a thorough human review is still necessary. I get the feeling that if I had coded everything myself from the start, I would have been just as fast, if not faster. I wouldn’t have to tidy up after the agent and explain everything again once it has exhausted its context window. Moreover, I would have a better overview and understanding of what is actually happening.

Plus, I get the feeling that instead of focusing on proper software engineering, developers increasingly just tweak their AI workflow: MCP servers, AGENT.md and CLAUDE.md best practices, prompt engineering, bundling multiple agents together in a hot mess that burns millions of tokens in a couple of minutes, building new harnesses for the agents, tools replacing Markdown plans, context enhancers, etc. It is harder than ever to keep track of developments in software engineering.

In all fairness: so far, every new version of an AI agent has significantly improved the quality of the code it generates. You don’t need to hold the agent’s hand as much anymore. Prompting has also become much easier. If this trend continues, we might indeed be looking at a bleak future for software developers.

Gambling with code

Sometimes, even with a well-primed context, the AI fumbles. After the first few edits, you can already see that this is not going where you wanted it to go. Or it already looks pretty good, so you ask for improvements, a few changes here and there. You try to guide the agent toward the solution you have in mind. This process is time-consuming and annoying, and it can lead to frustration. But then, every so often, you start from scratch: a fresh context, with only the “default” project files (e.g. CLAUDE.md) primed. And the AI magically does exactly what you want. That is what keeps you going.

At some point, I was reminded of a slot machine. You pull the lever, the machine does its thing, and either it works out, you win, and you get a dopamine hit, or you lose and have to try again. Compare this to an AI: the prompt is the lever. You pull. Either you get what you want (good code and a dopamine hit), or you get crappy code. You revert and try again with refined prompts and context. Repeat. Not always getting what you want is one of the key principles that keep people glued to the machines. Now, I am not accusing the makers of AI agents of wanting to turn us into gambling addicts. Still, we slide into a sort of addiction to the machine.

Maybe addiction is too strong a term. But it is certainly a dependency. You depend, or rather rely, on the agent. When you try to code something yourself again after weeks of AI-augmented coding, it feels like the olden days when Stack Overflow or your internet connection was down. You are stuck. You fumble for the keywords of your programming language and grasp for the syntax, idioms, and patterns. This is scary. At least for me.

PRs all day, every day

There is another downside to AI coding. Working with an AI agent feels like a whole day filled with code reviews. A never-ending flow of pull requests, written by a co-worker who oscillates between an eager junior developer on cocaine and a knowledgeable senior engineer. It is like working with a colleague who has a borderline personality. You never know which one you will get. Problems are solved with confidence. There is always an answer. Even if the answer is pure garbage.

After a couple of days, I find this exhausting. If you write code yourself, you probably know “the flow”. You happily code away. You are in “the zone”. Time flies by, and you know exactly what to do. Lines of code drop from your fingers onto the keyboard like diamonds. After a bit of refactoring and testing, you are satisfied. The day flew by, and you feel fulfilled. This is why I became a programmer. Coding is fun. I like to code. Some might argue that it isn’t creative work, but I disagree. Extracting interfaces, making code more readable, introducing abstractions, writing nice tests: all of that brings me joy.

And now? Now I just stare at a terminal window where the AI explains its “thinking” in more or less humorous sentences. At some point, you only skim the generated code. This feels wrong. There is no flow anymore. My mind tends to wander while the AI generates stuff. I do other things, I lose focus, I get distracted more easily. I notice that I feel more exhausted after an AI-assisted coding session than I normally would after hours of coding everything myself. I also feel nervous, anxious, and fidgety. What am I doing? My usage window just reset. I could be “coding”. Precious tokens are going to waste just because I am not at my machine. This is crazy.

Code Ownership

I used to be proud of the code I had written. I wrote tests, I wrote code, I refactored, I tested. Each piece of software became a bit of “my baby”. But with AI-generated code? I don’t feel anything. It is as if someone else wrote it. And technically, someone else did write it. I have no connection to this code. I may be the one who prompted it, but there was no writing. There was no cursing, no browsing for answers, no research, no debugging, and no praying to all the gods above (if any) to make the code work. I lose touch with the code. There is no “learning things the hard way” anymore. The AI solves everything. And when it doesn’t, the frustration is greater than ever.

Conclusion

There are a lot of “sometimes”, “from time to time”, and “every so often” in this article. And for a programmer, that is part of the problem. What we want, and what we need, is consistency and determinism. When a program behaves non-deterministically, this is usually considered a bug. With AI, we accept it as a given.

All is lost then, you say? Definitely not. But we have to see AI as just another tool in our toolchain. It should not replace reasoning about problems and code, nor should it write every line of code for you. What is it good for? A lot of things, actually.

- Reviewing: AI reads code significantly faster than any human ever could. It can make suggestions that go beyond “this is wrong” to “this is the right way to do it” or “this will make the code better”.

- Scaffolding: Let the AI generate the boilerplate code so you can focus on the important parts of the project.

- Wiring stuff together: For example, properly integrating a service with the REST API.

- Explaining code: Let the AI explain complicated parts of source code in plain English.

- Researching: Let the AI look for great libraries, patterns, and best practices.

- Planning: You might have sketched out a rough idea of how to implement a feature. Let the AI help you refine it.

- Brainstorming: No idea how to start? Let the AI generate ideas for you.

- Exploring: Implement multiple solutions, each with a different approach, to get a better feeling for which one is right for the project. Or implement each solution with a different library to see which one appeals to you more.

- Delegating annoying tasks: Fix linting issues, write documentation, replace one library with another.

- Debugging: As with code review, the AI can read code much faster than you. Let it detect issues and pinpoint likely places for bugs while you go through the code yourself.

- Finishing touch: You solved the core problem of an issue, so the “interesting” part is over? Let the AI write code and tests for the edge cases.

To me, using natural language to write code feels like a transitory, intermediate state. And this intermediate state seems almost comical. We used to invent and improve programming languages so that humans could better read and write code to communicate with machines. Now we teach machines to write code in these programming languages so that they can communicate with other machines, in code that humans find increasingly hard to understand.

Maybe, at some point in the future, AI models will write machine code directly, and we will basically be working with a black box: we tell the AI what needs to change. A bit like talking to JARVIS from the Marvel Universe or the computer in Star Trek. We don’t know its inner workings. It just does it. But we are not there yet.